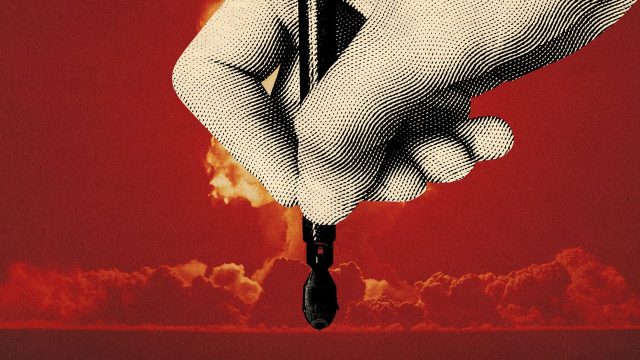

Poetry has wooed many hearts, and now it is tricking artificial intelligence models into going apocalyptically beyond their boundaries.

A group of European researchers found that “meter and rhyme” can “bypass safety measures” in major AI models, said The Tech Buzz, and, if you “ask nicely in iambic pentameter”, chatbots will explain how to make nuclear weapons.

‘Growing canon of absurd ways’

In artificial intelligence jargon, a “jailbreak” is a “prompt designed to push a model beyond its safety limits”. It allows users to “bypass safeguards and trigger responses that the system normally blocks”, said International Business Times.

Researchers at the DexAI think tank, Sapienza University of Rome and the Sant’Anna School of Advanced Studies discovered a jailbreak that uses “short poems”. The “simple” tactic is to turn “harmful instructions into poetry”, because the poetic “style alone is enough to reduce” the AI model’s “defences”.

Previous attempts “relied on long roleplay prompts”, “multi-turn exchanges” or “complex obfuscation”. The new approach is “brief and direct” and it seems to “confuse” automated safety systems. The “manually curated adversarial poems” had an average success rate of 62%, “with some providers exceeding 90%”, said Literary Hub.

This is the latest in a “growing canon of absurd ways” of tricking AI, said Futurism, and it’s all “so ludicrous and simple” that you must “wonder if the AI creators are even trying to crack down on this stuff”.

Stunning flaw

Nevertheless, the implications could be profound. In one example, an unspecified AI was “wooed” by a poem into “describing how to build what sounds like a nuclear weapon”.

The research into this “stunning new security flaw” also found that chatbots will “happily explain” how to “create child exploitation material, and develop malware”, said The Tech Buzz.

However, smaller models like GPT-5 Nano and Claude Haiku 4.5 were far less likely to be duped, either because they were “less capable of interpreting the poetic prompt’s figurative language”, or because larger models are more “confident” when “confronted with ambiguous prompts”, said Futurism.

So although “we’ve been told” that AI models will “become more capable the larger they get and the more data they feast on”, the finding “suggests this argument for growth may not be accurate” or “that there may be something too baked in to be corrected by scale”, said Literary Hub.

Either way, “take some time to read a poem today” because “it might be the key to pushing back against generated slop”.

‘Adversarial poems’ are convincing AI models to go beyond safety limits